What we learned from Graphite and Superblocks at our recent Work-Bench NY Enterprise Tech Meetup - on chasing PMF, designing for AI-first users, and evolving fast.

AI isn’t just moving fast. It’s redefining what it means to build, sell, and operate - all at once.

And in this new reality, the question has often become:

Did your product launch before ChatGPT, or after?

That line now separates companies that:

- Have products that feel AI-native from ones that feel dated

- Empower customers with true automation, not just faster workflows

- Can adapt their tech stack as models evolve (without breaking things)

- Have GTM strategies that still work in a fast-moving AI market

These aren’t differentiators anymore. In fact, they’re table stakes in today’s AI-everything era. But that doesn’t mean it’s too late. Even enterprise startups that launched long before ChatGPT can keep up, if they’re willing to rethink their playbooks and evolve beyond what’s comfortable.

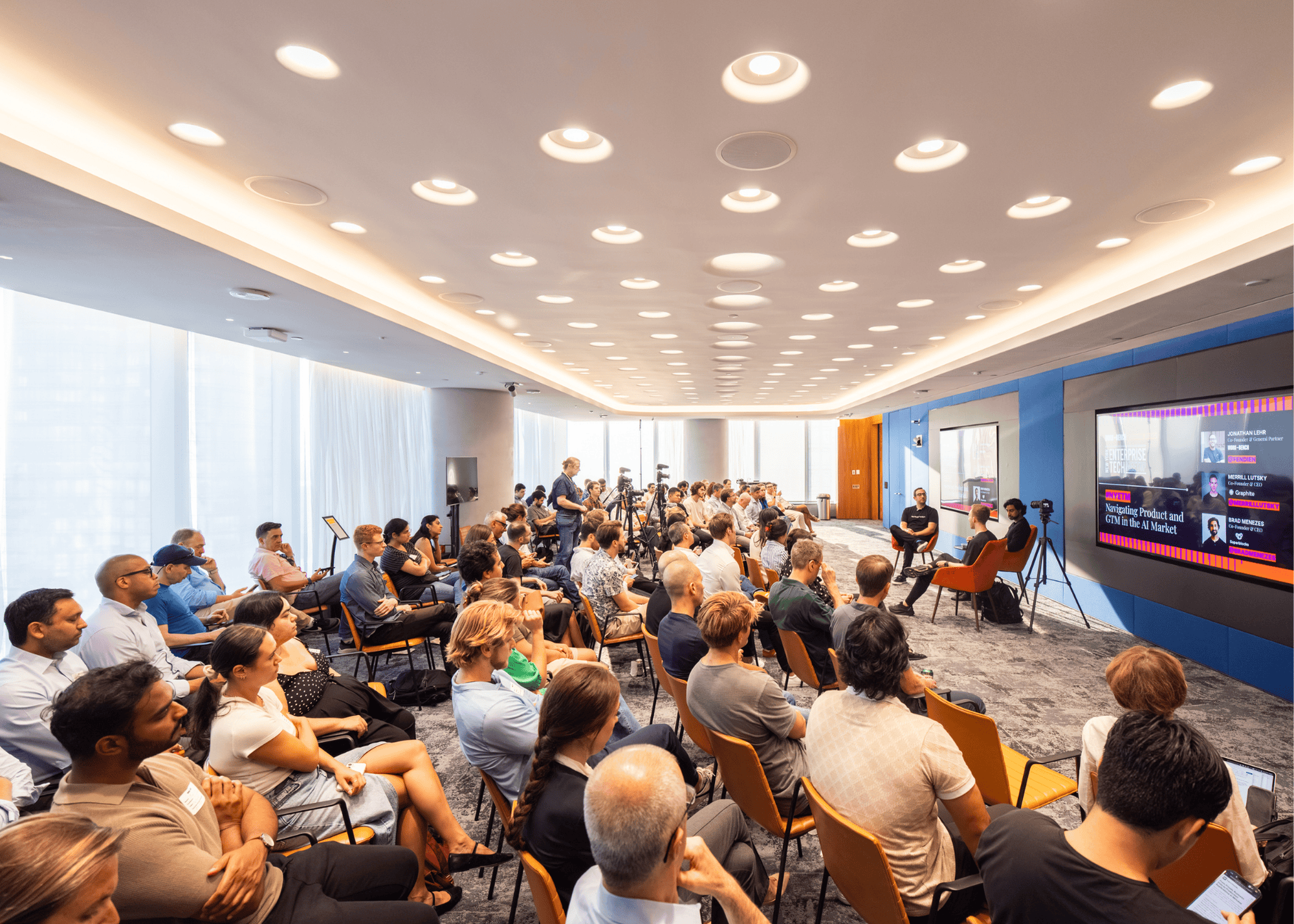

That’s why we brought together two founders who are tackling these shifts head-on at our July Work-Bench Enterprise Tech Meetup (NYETM), hosted at the Wells Fargo offices in Hudson Yards:

- Merrill Lutsky is the Co-founder & CEO of Graphite, an AI-native code review platform that has raised $81M in total funding. Graphite helps engineering teams ship faster by combining stacked diffs with AI-powered code review. The product is used by engineering teams at Ramp, Shopify, Figma, and Cursor, and is backed by a16z and Accel, among others.

- Brad Menezes is the Co-founder & CEO of Superblocks, a platform for building AI-native internal tools, with $60M in total funding. The product powers engineering teams at Cockroach Labs, Instacart, and Capsule, and is backed by Kleiner Perkins, Spark Capital, and others.

Their stories offer a snapshot of what it actually looks like to adapt, not just to AI as a capability, but to the new pace, structure, and pressure it’s introduced across the stack.

Below, we dive into 6 key takeaways from the conversation.

PMF is a moving target

In the AI era, product-market fit isn’t something you achieve once. It’s something you constantly have to revalidate - sometimes every quarter, or even more often.

Superblocks launched four years ago as a low-code platform for internal apps, with strong traction and clear PMF. But that changed fast once “vibe coding” tools took off.

Platforms like Replit, Lovable, and Bolt introduced new, more fluid coding experiences. Customers spoke favorably of their experiences with these tools, and that feedback triggered a complete reset: Superblocks set out to become an “enterprise vibe coding” company.

“We had to go all in…we burned the boats,” Brad said.

The change wasn’t just about UI. It was about who could build. Semi-technical users now had access to a new kind of capability:

“Coding is like a ChatGPT moment. It’s the iPhone moment for them. They’ve never been able to build, and now they can,” said Brad. He called it a “magical moment,” one where a previously blocked user could now do something they’d never been able to do before. That insight reframed the product, the user, and the opportunity.

Graphite didn’t face a PMF reset, but AI still shifted the market. Merrill described how demand expanded rapidly as smaller teams began functioning like much larger ones.

“The window of companies that were interested in a tool for accelerating code review… has now just shifted massively,” he said.

TL;DR: AI doesn’t just change what your product does. It changes what customers expect, who your users are, and how fast you need to move. For Superblocks, that meant a full rebuild. For Graphite, it meant faster expansion. Either way, PMF doesn’t hold. It shifts, sometimes more quickly than you expect.

Your next user might be an AI agent

Superblocks didn’t just layer AI on top of its product. It re-architected the product to treat AI as a core user. That meant ripping out its DSL and rebuilding the engine in actual code that LLMs could understand, execute, and interact with.

“We actually are designing for an AI Agent as a core user of the product,” Brad said.

But the real changes happened under the hood. The AI had to function within complex enterprise systems (things like permissions, audit logs, observability, and secure data access) without compromising trust or governance.

That’s where context engineering came in. The team had to build the infrastructure to deliver the right data to the model at the right time, based on user permissions and organizational rules. Without that, the model wouldn't know what it should or shouldn't see, and any output it generated could be useless and/or dangerous.
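To make that idea concrete, here is a minimal Python sketch of permission-aware context assembly. It is not Superblocks’ implementation: the `Document`, `User`, and `ContextBuilder` names, the role-based rule, and the sample data are all hypothetical, chosen only to illustrate filtering what the model sees by who is asking and recording what was shared.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_roles: frozenset  # hypothetical rule: roles permitted to read this document


@dataclass(frozen=True)
class User:
    name: str
    roles: frozenset


@dataclass
class ContextBuilder:
    documents: list
    audit_log: list = field(default_factory=list)

    def build(self, user: User, max_docs: int = 3) -> str:
        # Keep only documents that share at least one role with the requesting user.
        visible = [d for d in self.documents if d.allowed_roles & user.roles]
        selected = visible[:max_docs]
        # Record which documents were handed to the model, and on whose behalf.
        self.audit_log.append((user.name, [d.doc_id for d in selected]))
        # Join the permitted documents into the text the model will receive.
        return "\n\n".join(f"[{d.doc_id}] {d.text}" for d in selected)


# Illustrative data only.
docs = [
    Document("payroll", "Salary totals for the third quarter.", frozenset({"finance"})),
    Document("runbook", "Steps to restart the billing service.", frozenset({"engineering", "finance"})),
]

builder = ContextBuilder(docs)
engineer = User("dana", frozenset({"engineering"}))
context = builder.build(engineer)
print(context)
```

In this sketch the filtering happens before any prompt is assembled, so the model never receives data the requesting user could not read, and the audit log captures each hand-off for later review.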

Graphite took a more incremental path. Its AI code reviewer started as a side project, with early versions that were noisy and unreliable. “At first it was just an IP implementation…every now and then [it would turn on] and everybody would complain and we’d have to shut it down,” said Merrill.

GPT-3.5 was the turning point. It was the first time the model worked well enough that “there wasn’t an immediate tomato throwing,” said Merrill. Once model quality improved, the team started treating AI as a real part of the product’s future, not just an experiment, and eventually scaled the effort as demand grew.

TL;DR: If AI is going to do real work, it needs to be treated like a real user. That changes how you build - not just what the product does, but how it works behind the scenes. You need to control what the model sees, what it’s allowed to do, and how it behaves, just like you would with a human user.

You can’t outsource product intuition

Both founders are still deep in the work - not just reviewing strategy but taking user calls, talking to engineers, and recruiting talent themselves.

Brad has done away with one-on-ones and weekly meetings. “My schedule is basically I do customer calls back to back in the morning and afternoon. Then I spend the late afternoon or early evening with engineering. And then late evening is recruiting,” he said.

Merrill’s focus is a little different: stepping back regularly to question whether the roadmap still makes sense. He says, “it takes a conscious effort to pause and say, okay, are we actually building the right thing? What is our view on how this space is going to evolve?”

TL;DR: The common thread is that you can’t delegate product judgment in this environment. You have to live in it to understand it. Strategy moves too fast to hand off, and founders still need to do the work, whether it’s customer calls, product reviews, or hiring decisions.

Learning AI beats hiring AI, for many startups

Both founders agreed that you don’t need a team of PhDs to build strong AI products at the application layer. What matters more is having a team that’s curious, has time to experiment, and can adapt as the model landscape shifts.

“If you’re building an application layer, I think a lot of it can be learned,” said Brad. “You just have to give the team space to play around with it and develop the competency,” Merrill said.

After noting how hard it is right now to find someone with five years of experience with LLMs, Brad added:

“I don’t think most startups can afford AI researchers. When Meta’s paying hundreds of millions of dollars for an AI researcher, even one that’s maybe a very junior AI researcher is still going to be unaffordable.”

TL;DR: You don't need to hire AI experts to build great AI products. Both founders learned by doing, and say the real advantage comes from giving strong engineers time to experiment, explore, and adapt. Most of what matters at the application layer (things like prompting, model choice, and how to deliver the right data to the model) can be picked up on the job.

Build for your skeptics, not just your AI ‘maximalists’

One of the most useful internal product barometers was whether their most skeptical teammates actually liked what they built.

“Can we make even the most skeptical engineer on our team love this product?” asked Merrill.

He said that was the real signal: not internal adoption for adoption’s sake, but genuine buy-in from the people who were least likely to be impressed by a trendy new AI feature. If they’d be disappointed to lose it, the team knew they were on to something.

Brad reflected on how Superblocks started its AI journey with just the true believers: the people who were all-in on LLMs from day one. “What we started with was the people that were AI maximalists… I think that was actually a mistake.”

TL;DR: The best internal feedback doesn’t come from your AI maximalists. It comes from the skeptics, the ones who’ll call it out if it’s slow, clunky, or doesn’t deliver. Broaden internal exposure and give the full team the tools to adopt new workflows, not just the people already excited about them.

Your implementation strategy is your GTM

AI-first products aren’t automatically self-serve, especially in the enterprise. Shipping features isn’t enough. You have to help customers get to value - and fast.

“It’s all about getting to production. It’s not about prototypes,” said Brad.

This is why Superblocks took a page from Palantir and built a forward-deployed engineering team: people who sit inside customer orgs, help them integrate the product into existing workflows, and stay involved until it’s working in production.

“The product is easier to use than ever. But I actually think it’s the most important time to actually add real implementation,” Brad said.

Graphite, meanwhile, focuses on streamlining the early technical setup, from getting the context right to plugging into customer stacks, and making sure the AI shows up in the right places. “It’s more about getting the initial integrations right… and then giving them the tools to go further,” said Merrill.

TL;DR: Across both companies, implementation wasn’t a nice-to-have. It was the difference between a stalled rollout and a successful expansion. Helping customers bridge the gap from “cool demo” to “real value” is core to both product and GTM.
